In 2025, disinformation campaigns reached unprecedented levels, particularly through AI-generated content such as viral videos, blurring the line between what is real and what is fake. These campaigns were used to sway opinion on key issues such as Russia’s war in Ukraine and on political figures such as French President Emmanuel Macron.

Disinformation thrived in political contexts, especially around elections in Brazil and Moldova, and targeted figures such as Ukraine’s President Zelenskyy. Health misinformation also spread, including harmful claims that eating certain foods during pregnancy affects skin color, while vaccine misinformation incited violence in Pakistan.

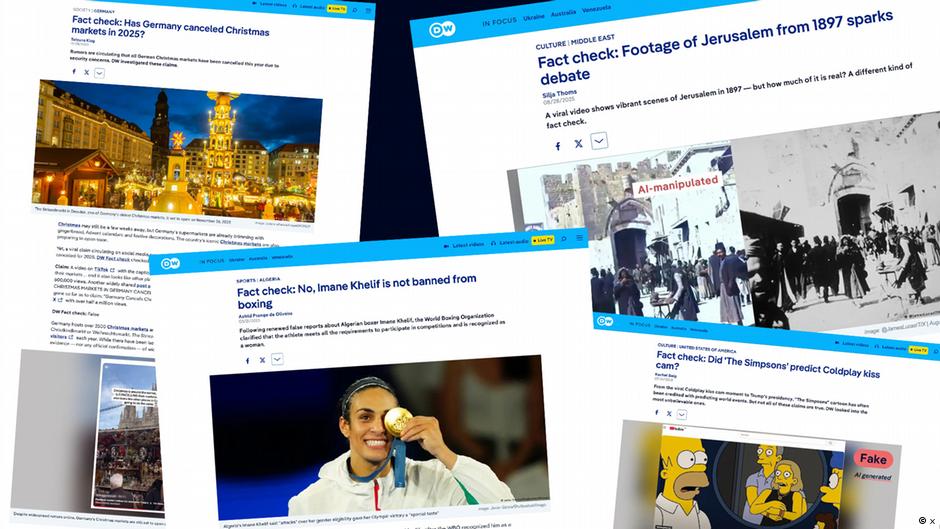

Climate change narratives were distorted, fueling misconceptions about Antarctic ice growth. In technology, people increasingly turned to generative AI tools for fact-checking, even though these tools often returned inaccurate information. Disinformation also permeated discussions of contested topics such as transgender athletes and historical events, with a range of misleading claims circulating online.

Collaboration among fact-checking organizations became essential for countering these false narratives, especially those tied to elections and international propaganda. As AI-generated content proliferated, detecting fakes became increasingly difficult. Despite the rise of tools such as AI chatbots, human expertise remained crucial for effective fact-checking. As the landscape evolves, it is vital for individuals to learn how to distinguish genuine content from AI-generated content.