On December 26, 2025, Science Feedback and a consortium of European fact-checkers published findings from the SIMODS project, the first extensive cross-platform measurement of disinformation across six major social media platforms in the EU. The study analyzed about 2.6 million posts, which together drew around 24 billion views, from Facebook, Instagram, LinkedIn, TikTok, X/Twitter, and YouTube in France, Poland, Slovakia, and Spain.

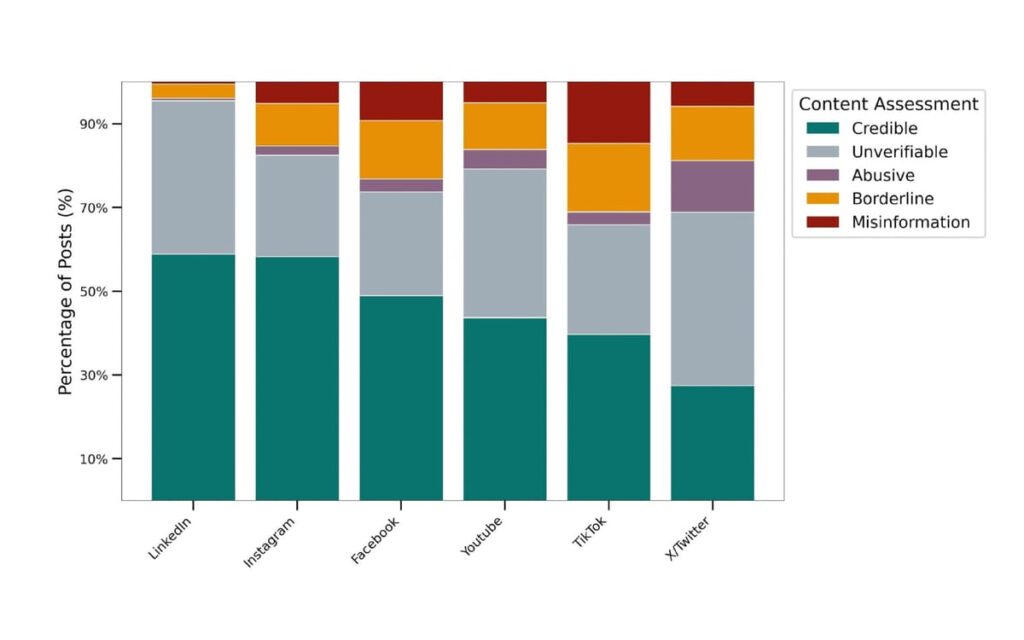

TikTok had the highest rate of misinformation at 20%, meaning one in five posts contained misleading or false information on key topics such as health and politics. Facebook was next at 13%, followed by X/Twitter at 11%. YouTube and Instagram each showed around 8%, while LinkedIn had the lowest rate at 2%.

The research represents a significant methodological advance, as previous studies lacked the necessary scale. Professional fact-checkers examined posts published from March 17 to April 13, 2025, using robust annotation protocols. When borderline content and hate speech were included, the prevalence of problematic content was highest on TikTok and X/Twitter.

This study arrives amid notable policy changes by platforms, many of which are stepping back from their commitments to combat misinformation, a retreat often attributed to political pressure. The report highlights that low-credibility accounts attract significantly more engagement than credible sources, indicating systemic amplification of misinformation across most platforms.

Health misinformation made up the largest share of false posts (43.4%), followed by the Russia-Ukraine war (24.5%) and national politics (15.5%). The study also noted that misinformation was being monetized: low-credibility channels on YouTube and Facebook attracted ad revenue.

Limited transparency prevented a full picture of the situation, with platforms such as TikTok and X/Twitter denying data access requests. The study aims to inform EU policy on the Code of Practice on Disinformation and emphasizes the need for better data access for independent research.

Overall, the research underscores the prevalence of misleading content across platforms and calls for systematic, evidence-based measurement to hold platforms accountable and protect users’ rights to accurate information. A second measurement is planned for early 2026.