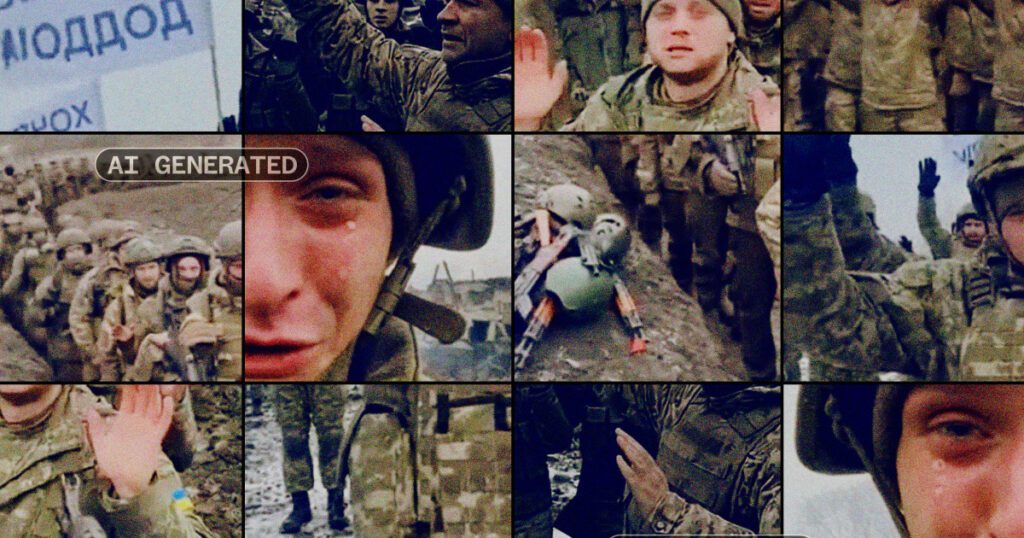

The article discusses misleading videos spreading on platforms like YouTube, TikTok, Facebook, and X that depict Ukrainian soldiers as unwilling to fight. The videos are part of a sophisticated disinformation campaign related to Russia’s war in Ukraine. Although their source is unclear, they reflect a trend toward more refined, harder-to-detect misinformation. Experts, including Alice Lee, a Russian influence analyst at NewsGuard, note that videos created with tools like OpenAI’s Sora 2 are particularly hard to identify as fake because they often lack visual inconsistencies.

OpenAI has acknowledged that, although it has implemented some safeguards against generating disinformation, those measures may not be completely effective. NewsGuard’s research indicates that, in tests, Sora 2 generated realistic videos advancing false claims 80% of the time. Videos produced with Sora have been found to show fabricated statements and scenarios, and their sensational nature has earned them substantial views.

Despite company policies against graphic violence, Sora-generated content depicting violent imagery has surfaced. Major platforms like TikTok and YouTube are actively working to remove such content, but the videos often resurface as reposts on other social media sites. Experts warn that as AI technology advances, viewers will find it increasingly difficult to tell real content from fabricated content, and they urge consumers to remain critical of the information they encounter online.